This setup lets us experiment with OSP director, play with the existing Heat templates, create new ones and understand how TripleO is used to install OpenStack, all from the comfort of a laptop.

VMware Fusion Professional is used here, but the setup should also work in VMware Workstation with virtually no changes, and in vSphere or VirtualBox with an equivalent configuration.

This guide follows the official Red Hat documentation, in particular the Director Installation and Usage guide.

Architecture

Architecture diagram

Standard RHEL OSP 7 architecture with multiple networks, VLANs, bonding and provisioning from the Undercloud / director node via PXE.

Networks and VLANs

No special setup is needed to enable VLAN support in VMware Fusion; we just configure the VLANs and their networks in RHEL as usual.

DHCP and PXE

DHCP and PXE are provided by the Undercloud VM.

NAT

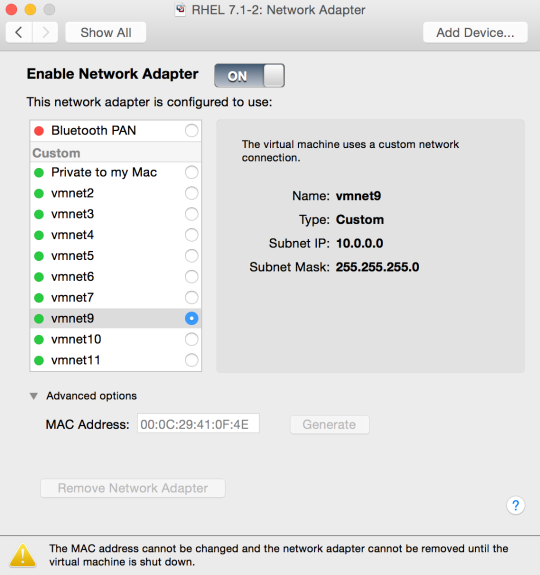

VMware Fusion NAT will be used to provide external access to the Controller and Compute VMs via the provisioning and external networks. The VMware Fusion NAT configuration shown below sets 10.0.0.2 on the Mac OS X host as the default gateway for the VMs; this is the IP that will be used as the default gateway in the TripleO templates.

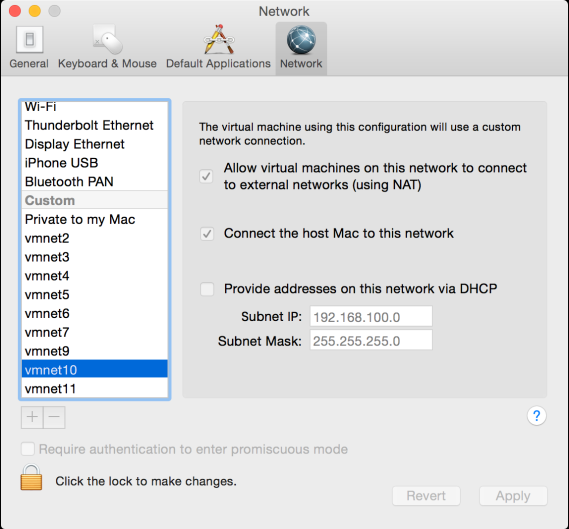

VMware Fusion Networks

The networks are configured in the VMware Fusion menu in Preferences, then Network.

The provisioning (PXE) network is set up in vmnet9, the rest of the networks in vmnet10.

The above describes the architecture of our laptop lab in VMware Fusion. Now, let’s implement it.

Step 1. Create 3 VMs in VMware Fusion

VM specifications

| VM | vCPUs | Memory | Disk | NICs | Boot device |
|---|---|---|---|---|---|
| Undercloud | 1 | 3000 MB | 20 GB | 2 | Disk |
| Controller | 2 | 3000 MB | 20 GB | 3 | 1st NIC |
| Compute | 2 | 3000 MB | 20 GB | 3 | 1st NIC |

Disk size

You may want to increase the disk size of the controller to be able to test more or larger images, and of the compute node to be able to run more or larger instances. 3 GB of memory is enough if you include a swap partition for the compute and controller nodes.

VMware network driver in .vmx file

Make sure the network driver in the three VMs is vmxnet3 and not e1000, so that RHEL shows all the NICs:

$ grep 'ethernet[0-9].virtualDev' Undercloud.vmwarevm/Undercloud.vmx
ethernet0.virtualDev = "vmxnet3"
ethernet1.virtualDev = "vmxnet3"

ethX vs enoX NIC names

By default, the OSP director images have the kernel boot option net.ifnames=0. This will name the network interfaces as ethX as opposed to enoX. This is why in the Undercloud the interface names are eno16777984 and eno33557248 (default net.ifnames=1) and the Controller and Compute VMs have eth0, eth1 and eth2. This may change in RHEL OSP 7.2.

Undercloud VM Networks

This is the mapping of VMware networks to OS NICs. An OVS bridge, br-ctlplane, will be created automatically by the installation of the Undercloud.

| Networks | VMware Network | RHEL NIC |
|---|---|---|
| External | vmnet10 | eno33557248 |
| Provisioning | vmnet9 | eno16777984 / br-ctlplane |

Copy the MAC addresses of the controller and compute VMs

Make a note of the MAC addresses of the first vNIC in the Controller and Compute VMs.
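If you prefer not to copy the MAC addresses from the VMware Fusion UI, they can also be read from each VM's .vmx file. The following is a minimal Python sketch, assuming the MAC of the first vNIC is stored under the key ethernet0.generatedAddress (auto-generated) or ethernet0.address (statically assigned); check your own .vmx file to confirm. The function name first_nic_mac and the sample text are hypothetical, and the ethernet1 MAC is made-up sample data.

```python
import re

def first_nic_mac(vmx_text):
    """Return the MAC address of ethernet0 from the text of a .vmx file, or None."""
    settings = {}
    for line in vmx_text.splitlines():
        # .vmx files are made of lines such as: key = "value"
        match = re.match(r'\s*([\w.:]+)\s*=\s*"(.*)"\s*$', line)
        if match:
            settings[match.group(1).lower()] = match.group(2)
    # Assumption: a static MAC lives in ethernet0.address,
    # an auto-generated one in ethernet0.generatedAddress.
    return settings.get("ethernet0.address") or settings.get("ethernet0.generatedaddress")

# Sample .vmx fragment (illustrative only)
sample = '''
ethernet0.virtualDev = "vmxnet3"
ethernet0.addressType = "generated"
ethernet0.generatedAddress = "00:0c:29:8f:1e:7b"
ethernet1.generatedAddress = "00:0c:29:8f:1e:85"
'''

print(first_nic_mac(sample))
```

In practice you would pass the contents of Controller.vmx and Compute.vmx instead of the sample string.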

Step 2. Install the Undercloud

Install RHEL 7.1 in your preferred way in the Undercloud VM and then configure it as follows.

Network interfaces

First, set up the network. 192.168.100.10 will be the external IP in eno33557248 and 10.0.0.10 the provisioning IP in eno16777984.

In /etc/sysconfig/network-scripts/ifcfg-eno33557248

TYPE=Ethernet BOOTPROTO=none DEFROUTE=yes NAME=eno33557248 DEVICE=eno33557248 ONBOOT=yes IPADDR=192.168.100.10 PREFIX=24 GATEWAY=192.168.100.2 DNS1=192.168.100.2

And in /etc/sysconfig/network-scripts/ifcfg-eno16777984

TYPE=Ethernet BOOTPROTO=none DEFROUTE=yes NAME=eno16777984 DEVICE=eno16777984 ONBOOT=yes IPADDR=10.0.0.10 PREFIX=24

Once the network is set up, ssh from your Mac OS X to 192.168.100.10 and not to 10.0.0.10. The latter will be automatically reconfigured during the Undercloud installation to become the IP of the br-ctlplane bridge, and you would lose access during the reconfiguration.

Undercloud hostname

The Undercloud needs a fully qualified domain name and it also needs to be present in the /etc/hosts file. For example:

# sudo hostnamectl set-hostname undercloud.osp.poc

And in /etc/hosts:

192.168.100.10 undercloud.osp.poc undercloud

Subscribe RHEL and Install the Undercloud Package

Now, subscribe the RHEL OS to Red Hat’s CDN and enable the required repos.

Then, install the OpenStack client plug-in that will allow us to install the Undercloud:

# yum install -y python-rdomanager-oscplugin

Create the user stack

After that, create the stack user, which we will use to do the installation of the Undercloud and later the deployment and management of the Overcloud.

Configure the director

The following undercloud.conf file is a working configuration for this guide, which is mostly self-explanatory.

For a reference of the configuration flags, there’s a documented sample in /usr/share/instack-undercloud/undercloud.conf.sample

Become the stack user and create the file in its home directory.

# su - stack
$ vi ~/undercloud.conf

[DEFAULT]
image_path = /home/stack/images
local_ip = 10.0.0.10/24
undercloud_public_vip = 10.0.0.11
undercloud_admin_vip = 10.0.0.12
local_interface = eno16777984
masquerade_network = 10.0.0.0/24
dhcp_start = 10.0.0.50
dhcp_end = 10.0.0.100
network_cidr = 10.0.0.0/24
network_gateway = 10.0.0.10
discovery_iprange = 10.0.0.100,10.0.0.120
undercloud_debug = true
[auth]

The masquerade_network config flag is optional as in VMware Fusion we already have NAT as explained above, but it might be needed if you use VirtualBox.
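The address ranges in undercloud.conf are easy to get wrong, so it can help to sanity-check them before running the installation. The sketch below is only an illustration using Python's standard configparser and ipaddress modules; the check_ranges helper is hypothetical and not part of director. It verifies that the DHCP and discovery ranges sit inside network_cidr and reports whether they overlap. With the values used in this guide the two ranges share 10.0.0.100; as far as I know the director documentation advises keeping them separate, so starting the discovery range at 10.0.0.101 would be the more cautious choice.

```python
import configparser
import ipaddress

# Subset of the undercloud.conf used in this guide
UNDERCLOUD_CONF = """
[DEFAULT]
local_ip = 10.0.0.10/24
dhcp_start = 10.0.0.50
dhcp_end = 10.0.0.100
network_cidr = 10.0.0.0/24
discovery_iprange = 10.0.0.100,10.0.0.120
"""

def check_ranges(conf_text):
    """Check the DHCP and discovery ranges against network_cidr and each other."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    conf = parser["DEFAULT"]
    network = ipaddress.ip_network(conf["network_cidr"])
    dhcp = (ipaddress.ip_address(conf["dhcp_start"]),
            ipaddress.ip_address(conf["dhcp_end"]))
    disc = tuple(ipaddress.ip_address(ip.strip())
                 for ip in conf["discovery_iprange"].split(","))
    return {
        # Every boundary address must belong to the provisioning network
        "in_network": all(ip in network for ip in dhcp + disc),
        # Two closed ranges overlap when each one starts before the other ends
        "overlap": dhcp[0] <= disc[1] and disc[0] <= dhcp[1],
    }

print(check_ranges(UNDERCLOUD_CONF))
```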

Finally, get the Undercloud installed

We will run the installation as the stack user we created:

$ openstack undercloud install

Step 3. Set up the Overcloud deployment

Verify the undercloud is working

Load the environment first, then run the service list command:

$ . stackrc

$ openstack service list
+----------------------------------+------------+---------------+
| ID                               | Name       | Type          |
+----------------------------------+------------+---------------+
| 0208564b05b148ed9115f8ab0b04f960 | glance     | image         |
| 0df260095fde40c5ab838affcdbce524 | swift      | object-store  |
| 3b499d3319094de5a409d2c19a725ea8 | heat       | orchestration |
| 44d8d0095adf4f27ac814e1d4a1ef9cd | nova       | compute       |
| 84a1fe11ed464894b7efee7543ecd6d6 | neutron    | network       |
| c092025afc8d43388f67cb9773b1fb27 | keystone   | identity      |
| d1a85475321e4c3fa8796a235fd51773 | nova       | computev3     |
| d5e1ad8cca1549759ad1e936755f703b | ironic     | baremetal     |
| d90cb61c7583494fb1a2cffd590af8e8 | ceilometer | metering      |
| e71d47d820c8476291e60847af89f52f | tuskar     | management    |
+----------------------------------+------------+---------------+

Configure the fake_pxe Ironic driver

Ironic doesn’t have a driver for powering on and off VMware Fusion VMs so we will do it manually. We need to configure the fake_pxe driver for this.

Edit /etc/ironic/ironic.conf and add it:

enabled_drivers = pxe_ipmitool,pxe_ssh,pxe_drac,fake_pxe

Then restart ironic-conductor and verify the driver is loaded:

$ sudo systemctl restart openstack-ironic-conductor
$ ironic driver-list
+---------------------+--------------------+
| Supported driver(s) | Active host(s)     |
+---------------------+--------------------+
| fake_pxe            | undercloud.osp.poc |
| pxe_drac            | undercloud.osp.poc |
| pxe_ipmitool        | undercloud.osp.poc |
| pxe_ssh             | undercloud.osp.poc |
+---------------------+--------------------+

Upload the images into the Undercloud’s Glance

Download the images that will be used to deploy the OpenStack nodes to the directory specified in the image_path in the undercloud.conf file, in our example /home/stack/images. Get the images and untar them as described here. Then upload them into Glance in the Undercloud:

$ openstack overcloud image upload --image-path /home/stack/images/

Define the VMs in the Undercloud’s Ironic

TripleO needs to know about the nodes, in our case the VMware Fusion VMs. We describe them in the file instackenv.json which we’ll create in the home directory of the stack user.

Notice that here is where we use the MAC addresses we took from the two VMs.

{
  "nodes": [
    {
      "arch": "x86_64",
      "cpu": "2",
      "disk": "20",
      "mac": [
        "00:0c:29:8f:1e:7b"
      ],
      "memory": "3000",
      "pm_type": "fake_pxe"
    },
    {
      "arch": "x86_64",
      "cpu": "2",
      "disk": "20",
      "mac": [
        "00:0C:29:41:0F:4E"
      ],
      "memory": "3000",
      "pm_type": "fake_pxe"
    }
  ]
}
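Writing this file by hand is error-prone once you have more than a couple of VMs. As a rough sketch, the same structure can be generated from a list of MAC addresses with a few lines of Python. The build_instackenv helper and its default values are hypothetical; they simply mirror the fields and VM specs used in this guide, and lowercasing the MACs is my own normalization choice, not a requirement I can vouch for.

```python
import json

def build_instackenv(macs, cpu=2, memory_mb=3000, disk_gb=20):
    """Build the instackenv.json content for fake_pxe nodes from their MACs."""
    nodes = [
        {
            "arch": "x86_64",
            "cpu": str(cpu),
            "disk": str(disk_gb),
            "mac": [mac.lower()],  # normalize the case for consistency
            "memory": str(memory_mb),
            "pm_type": "fake_pxe",
        }
        for mac in macs
    ]
    return json.dumps({"nodes": nodes}, indent=2)

# The two MAC addresses noted in Step 1
print(build_instackenv(["00:0c:29:8f:1e:7b", "00:0C:29:41:0F:4E"]))
```

The printed text can be redirected to ~/instackenv.json in the home directory of the stack user.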

Import them to the undercloud:

$ openstack baremetal import --json instackenv.json

The command above adds the nodes to Ironic:

$ ironic node-list
+--------------------------------------+------+--------------------------------------+-------------+-----------------+-------------+
| UUID                                 | Name | Instance UUID                        | Power State | Provision State | Maintenance |
+--------------------------------------+------+--------------------------------------+-------------+-----------------+-------------+
| 111cf49a-eb9e-421d-af05-35ab0d74c5d6 | None | 941bbdf9-43c0-442e-8b65-0bd531322509 | power off   | available       | False       |
| e579df9f-528f-4d14-94bc-07b2af4b252f | None | f1bd425b-a4d9-4eca-8bc4-ee31b300e381 | power off   | available       | False       |
+--------------------------------------+------+--------------------------------------+-------------+-----------------+-------------+

To finish the registration of the nodes we run this command:

$ openstack baremetal configure boot

Discover the nodes

At this point we are ready to start discovering the nodes, i.e. having Ironic power them on, boot them with the discovery image that was uploaded before, and shut them down once the relevant hardware information has been saved in the node metadata in Ironic. This process is called introspection.

Note that as we use the fake_pxe driver, Ironic won’t power on the VMs, so we do it manually in VMware Fusion. We wait until the output of ironic node-list tells us that the power state is on and then we run this command:

$ openstack baremetal introspection bulk start
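Since the power state has to be checked by eye before starting introspection, a small helper can make the wait less tedious. The following Python sketch only parses the text printed by ironic node-list; it does not call Ironic itself. The function names are hypothetical, and the sample table is a trimmed, made-up variant of the output shown earlier, with one node already powered on.

```python
def power_states(table_text):
    """Map node UUID -> power state from `ironic node-list` table output."""
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in table_text.splitlines()
        if line.strip().startswith("|")  # skip the +----+ border lines
    ]
    if not rows:
        return {}
    header, body = rows[0], rows[1:]
    uuid_col = header.index("UUID")
    power_col = header.index("Power State")
    return {row[uuid_col]: row[power_col] for row in body}

def all_powered_on(table_text):
    """True only if there is at least one node and every node is powered on."""
    states = power_states(table_text)
    return bool(states) and all(state == "power on" for state in states.values())

# Trimmed sample output (illustrative data)
SAMPLE = """
+--------------------------------------+------+-------------+
| UUID                                 | Name | Power State |
+--------------------------------------+------+-------------+
| 111cf49a-eb9e-421d-af05-35ab0d74c5d6 | None | power off   |
| e579df9f-528f-4d14-94bc-07b2af4b252f | None | power on    |
+--------------------------------------+------+-------------+
"""

print(power_states(SAMPLE))
print(all_powered_on(SAMPLE))
```

You could feed it the captured output of ironic node-list in a loop and start the bulk introspection once all_powered_on returns True.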

Assign the roles to the nodes in Ironic

There are two roles in this example, compute and control. We will assign them manually with Ironic.

$ ironic node-update 111cf49a-eb9e-421d-af05-35ab0d74c5d6 add properties/capabilities='profile:compute,boot_option:local'
$ ironic node-update e579df9f-528f-4d14-94bc-07b2af4b252f add properties/capabilities='profile:control,boot_option:local'

Create the flavors in Nova and associate them with the roles in Ironic

This consists of creating flavors that match the specs of the VMs and then adding the control and compute profile properties to the corresponding flavors, so that they match the capabilities set in Ironic in the previous step.

$ openstack flavor create --id auto --ram 3000 --disk 17 --vcpus 2 --swap 2000 compute
$ openstack flavor create --id auto --ram 3000 --disk 19 --vcpus 2 --swap 1500 control

TripleO also needs a flavor called baremetal (which we won’t use):

$ openstack flavor create --id auto --ram 3000 --disk 19 --vcpus 2 baremetal

Notice the disk size is 1 GB smaller than the VM’s disk. This is a precaution to avoid No valid host found when deploying with Ironic, which sometimes is a bit too sensitive.

Also, notice that I added swap because 3 GB of memory is not enough and the out of memory killer could be triggered otherwise.

Now we make the flavors match with the capabilities we set in the Ironic nodes in the previous step:

$ openstack flavor set --property "cpu_arch"="x86_64" --property "capabilities:boot_option"="local" --property "capabilities:profile"="control" control
$ openstack flavor set --property "cpu_arch"="x86_64" --property "capabilities:boot_option"="local" --property "capabilities:profile"="compute" compute
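To make the relationship between the flavor properties and the Ironic node capabilities more concrete, here is a simplified Python illustration of the matching idea. It is not the scheduler's actual code, and the function names are hypothetical: it only shows that each capabilities:NAME property on the flavor is expected to equal the NAME:VALUE pair stored in the node's capabilities string.

```python
def parse_capabilities(capabilities):
    """Turn 'profile:compute,boot_option:local' into a dict."""
    return dict(item.split(":", 1) for item in capabilities.split(",") if item)

def flavor_matches_node(flavor_properties, node_capabilities):
    """Check that every capabilities:* flavor property is present on the node."""
    node_caps = parse_capabilities(node_capabilities)
    wanted = {
        key.split(":", 1)[1]: value
        for key, value in flavor_properties.items()
        if key.startswith("capabilities:")
    }
    return all(node_caps.get(name) == value for name, value in wanted.items())

# Properties set on the control flavor above
control_flavor = {
    "cpu_arch": "x86_64",
    "capabilities:boot_option": "local",
    "capabilities:profile": "control",
}

print(flavor_matches_node(control_flavor, "profile:control,boot_option:local"))
print(flavor_matches_node(control_flavor, "profile:compute,boot_option:local"))
```

The first call prints True and the second False, which is why a typo in either the profile name or the boot option tends to end in a No valid host found error.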

Step 4. Create the TripleO templates

Get the TripleO templates

Copy the TripleO heat templates to the home directory of the stack user.

$ mkdir ~/templates
$ cp -r /usr/share/openstack-tripleo-heat-templates/ ~/templates/

Create the network definitions

These are our network definitions:

| Network | Subnet | VLAN |
|---|---|---|
| Provisioning | 10.0.0.0/24 | VMware native |
| Internal API | 172.16.0.0/24 | 201 |
| Tenant | 172.17.0.0/24 | 204 |
| Storage | 172.18.0.0/24 | 202 |
| Storage Management | 172.19.0.0/24 | 203 |
| External | 192.168.100.0/24 | VMware native |

To allow creating dedicated networks for specific services, we describe them in a Heat environment file that we can call network-environment.yaml.

$ vi ~/templates/network-environment.yaml

resource_registry:
  OS::TripleO::Compute::Net::SoftwareConfig: /home/stack/templates/nic-configs/compute.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: /home/stack/templates/nic-configs/controller.yaml

parameter_defaults:
  # The IP address of the EC2 metadata server. Generally the IP of the Undercloud
  EC2MetadataIp: 10.0.0.10
  # Gateway router for the provisioning network (or Undercloud IP)
  ControlPlaneDefaultRoute: 10.0.0.2
  DnsServers: ["10.0.0.2"]

  InternalApiNetCidr: 172.16.0.0/24
  TenantNetCidr: 172.17.0.0/24
  StorageNetCidr: 172.18.0.0/24
  StorageMgmtNetCidr: 172.19.0.0/24
  ExternalNetCidr: 192.168.100.0/24

  # Leave room for floating IPs in the External allocation pool
  ExternalAllocationPools: [{'start': '192.168.100.100', 'end': '192.168.100.200'}]
  InternalApiAllocationPools: [{'start': '172.16.0.10', 'end': '172.16.0.200'}]
  TenantAllocationPools: [{'start': '172.17.0.10', 'end': '172.17.0.200'}]
  StorageAllocationPools: [{'start': '172.18.0.10', 'end': '172.18.0.200'}]
  StorageMgmtAllocationPools: [{'start': '172.19.0.10', 'end': '172.19.0.200'}]

  InternalApiNetworkVlanID: 201
  StorageNetworkVlanID: 202
  StorageMgmtNetworkVlanID: 203
  TenantNetworkVlanID: 204
  # ExternalNetworkVlanID: 100

  # Set to the router gateway on the external network
  ExternalInterfaceDefaultRoute: 192.168.100.2
  # Set to "br-ex" if using floating IPs on native VLAN on bridge br-ex
  NeutronExternalNetworkBridge: "br-ex"
  # Customize bonding options if required
  BondInterfaceOvsOptions:
    "bond_mode=active-backup"

More information about this template can be found here.
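A mistyped octet in one of the allocation pools is only discovered late in the deployment, so it can be worth checking the numbers beforehand. The sketch below is one possible way to do that with Python's ipaddress module. The NETWORKS dictionary just restates the CIDRs and pools from network-environment.yaml, and pool_problems is a hypothetical helper, not something shipped with TripleO.

```python
import ipaddress

# (CIDR, pool start, pool end) for each isolated network defined above
NETWORKS = {
    "InternalApi": ("172.16.0.0/24", "172.16.0.10", "172.16.0.200"),
    "Tenant": ("172.17.0.0/24", "172.17.0.10", "172.17.0.200"),
    "Storage": ("172.18.0.0/24", "172.18.0.10", "172.18.0.200"),
    "StorageMgmt": ("172.19.0.0/24", "172.19.0.10", "172.19.0.200"),
    "External": ("192.168.100.0/24", "192.168.100.100", "192.168.100.200"),
}

def pool_problems(networks):
    """Return a list of human-readable problems found in the allocation pools."""
    problems = []
    for name, (cidr, start, end) in networks.items():
        net = ipaddress.ip_network(cidr)
        first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
        if first not in net or last not in net:
            problems.append(f"{name}: pool is outside {cidr}")
        elif first > last:
            problems.append(f"{name}: pool start is after pool end")
    return problems

print(pool_problems(NETWORKS) or "all allocation pools fit their subnets")
```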

Configure the NICs of the VMs

We have examples of NIC configurations for multiple networks and bonding in /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/

We will use them as a template to define the Controller and Compute NIC setup.

$ mkdir ~/templates/nic-configs/

$ cp /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/* ~/templates/nic-configs/

Notice that they are called from the previous template network-environment.yaml.

Controller NICs

We want this setup in the controller:

| Bonded Interface | Bond Slaves | Bond Mode |
|---|---|---|
| bond1 | eth1, eth2 | active-backup |

| Networks | VMware Network | RHEL NIC |
|---|---|---|
| Provisioning | vmnet9 | eth0 |
| External | vmnet10 | bond1 / br-ex |
| Internal | vmnet10 | bond1 / vlan201 |
| Tenant | vmnet10 | bond1 / vlan204 |
| Storage | vmnet10 | bond1 / vlan202 |
| Storage Management | vmnet10 | bond1 / vlan203 |

We only need to modify the resources section of the ~/templates/nic-configs/controller.yaml to match the configuration in the table above:

$ vi ~/templates/nic-configs/controller.yaml

[...]

resources:
  OsNetConfigImpl:
    type: OS::Heat::StructuredConfig
    properties:
      group: os-apply-config
      config:
        os_net_config:
          network_config:
            -
              type: interface
              name: nic1
              use_dhcp: false
              addresses:
                -
                  ip_netmask:
                    list_join:
                      - '/'
                      - - {get_param: ControlPlaneIp}
                        - {get_param: ControlPlaneSubnetCidr}
              routes:
                -
                  ip_netmask: 169.254.169.254/32
                  next_hop: {get_param: EC2MetadataIp}
            -
              type: ovs_bridge
              name: {get_input: bridge_name}
              addresses:
                - ip_netmask: {get_param: ExternalIpSubnet}
              routes:
                - ip_netmask: 0.0.0.0/0
                  next_hop: {get_param: ExternalInterfaceDefaultRoute}
              dns_servers: {get_param: DnsServers}
              members:
                -
                  type: ovs_bond
                  name: bond1
                  ovs_options: {get_param: BondInterfaceOvsOptions}
                  members:
                    -
                      type: interface
                      name: nic2
                      primary: true
                    -
                      type: interface
                      name: nic3
                -
                  type: vlan
                  device: bond1
                  vlan_id: {get_param: InternalApiNetworkVlanID}
                  addresses:
                    -
                      ip_netmask: {get_param: InternalApiIpSubnet}
                -
                  type: vlan
                  device: bond1
                  vlan_id: {get_param: StorageNetworkVlanID}
                  addresses:
                    -
                      ip_netmask: {get_param: StorageIpSubnet}
                -
                  type: vlan
                  device: bond1
                  vlan_id: {get_param: StorageMgmtNetworkVlanID}
                  addresses:
                    -
                      ip_netmask: {get_param: StorageMgmtIpSubnet}
                -
                  type: vlan
                  device: bond1
                  vlan_id: {get_param: TenantNetworkVlanID}
                  addresses:
                    -
                      ip_netmask: {get_param: TenantIpSubnet}

outputs:
  OS::stack_id:
    description: The OsNetConfigImpl resource.
    value: {get_resource: OsNetConfigImpl}

Compute NICs

In the compute node we want this setup:

| Bonded Interface | Bond Slaves | Bond Mode |
|---|---|---|
| bond1 | eth1, eth2 | active-backup |

| Networks | VMware Network | RHEL NIC |
|---|---|---|
| Provisioning | vmnet9 | eth0 |
| Internal | vmnet10 | bond1 / vlan201 |
| Tenant | vmnet10 | bond1 / vlan204 |
| Storage | vmnet10 | bond1 / vlan202 |

$ vi ~/templates/nic-configs/compute.yaml

[...]

resources:
  OsNetConfigImpl:
    type: OS::Heat::StructuredConfig
    properties:
      group: os-apply-config
      config:
        os_net_config:
          network_config:
            -
              type: interface
              name: nic1
              use_dhcp: false
              dns_servers: {get_param: DnsServers}
              addresses:
                -
                  ip_netmask:
                    list_join:
                      - '/'
                      - - {get_param: ControlPlaneIp}
                        - {get_param: ControlPlaneSubnetCidr}
              routes:
                -
                  ip_netmask: 169.254.169.254/32
                  next_hop: {get_param: EC2MetadataIp}
                -
                  default: true
                  next_hop: {get_param: ControlPlaneDefaultRoute}
            -
              type: ovs_bridge
              name: {get_input: bridge_name}
              members:
                -
                  type: ovs_bond
                  name: bond1
                  ovs_options: {get_param: BondInterfaceOvsOptions}
                  members:
                    -
                      type: interface
                      name: nic2
                      primary: true
                    -
                      type: interface
                      name: nic3
                -
                  type: vlan
                  device: bond1
                  vlan_id: {get_param: InternalApiNetworkVlanID}
                  addresses:
                    -
                      ip_netmask: {get_param: InternalApiIpSubnet}
                -
                  type: vlan
                  device: bond1
                  vlan_id: {get_param: StorageNetworkVlanID}
                  addresses:
                    -
                      ip_netmask: {get_param: StorageIpSubnet}
                -
                  type: vlan
                  device: bond1
                  vlan_id: {get_param: TenantNetworkVlanID}
                  addresses:
                    -
                      ip_netmask: {get_param: TenantIpSubnet}

outputs:
  OS::stack_id:
    description: The OsNetConfigImpl resource.
    value: {get_resource: OsNetConfigImpl}

Enable Swap

Enabling the swap partition is done from within the OS. Ironic only creates the partition as instructed in the flavor. This can be done with the templates that allow running first boot scripts via cloud-init.

First, the environment file that registers the cloud-init user data template, /home/stack/templates/firstboot/firstboot.yaml:

resource_registry:
  OS::TripleO::NodeUserData: /home/stack/templates/firstboot/userdata.yaml

Then, the actual script for enabling swap, /home/stack/templates/firstboot/userdata.yaml:

heat_template_version: 2014-10-16

resources:
  userdata:
    type: OS::Heat::MultipartMime
    properties:
      parts:
      - config: {get_resource: swapon_config}

  swapon_config:
    type: OS::Heat::SoftwareConfig
    properties:
      config: |
        #!/bin/bash
        # Find the swap partition that Ironic created from the flavor's swap size
        swap_device=$(sudo fdisk -l | grep swap | awk '{print $1}')
        # Only act if a swap partition was actually found
        if [[ -n "$swap_device" ]]; then
          rc_local="/etc/rc.d/rc.local"
          # Enable swap on every boot, and also right now
          echo "swapon $swap_device " >> $rc_local
          chmod 755 $rc_local
          swapon $swap_device
        fi

outputs:
  OS::stack_id:
    value: {get_resource: userdata}

Step 5. Deploy the Overcloud

Summary

We have everything we need to deploy now:

- The Undercloud configured.

- Flavors for the compute and controller nodes.

- Images for the discovery and deployment of the nodes.

- Templates defining the networks in OpenStack.

- Templates defining the nodes’ NICs configuration.

- A first boot script used to enable swap.

We will use all this information when running the deploy command:

$ openstack overcloud deploy \
    --templates templates/openstack-tripleo-heat-templates/ \
    -e templates/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
    -e templates/network-environment.yaml \
    -e templates/firstboot/firstboot.yaml \
    --control-flavor control \
    --compute-flavor compute \
    --neutron-tunnel-types vxlan --neutron-network-type vxlan \
    --ntp-server clock.redhat.com
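Because Heat applies environment files in the order given, with later files overriding earlier ones, it is worth keeping the -e arguments in a deliberate order when the command grows. One way to keep that manageable is to assemble the command from a list, as in this Python sketch. The deploy_command helper is hypothetical; the flags and paths are exactly the ones used in the command above.

```python
import shlex

def deploy_command(templates_dir, environment_files):
    """Build the overcloud deploy command, keeping the -e files in order."""
    cmd = ["openstack", "overcloud", "deploy", "--templates", templates_dir]
    for env in environment_files:
        cmd += ["-e", env]
    cmd += [
        "--control-flavor", "control",
        "--compute-flavor", "compute",
        "--neutron-tunnel-types", "vxlan",
        "--neutron-network-type", "vxlan",
        "--ntp-server", "clock.redhat.com",
    ]
    return cmd

cmd = deploy_command(
    "templates/openstack-tripleo-heat-templates/",
    [
        # Generic network isolation first, then our own overrides
        "templates/openstack-tripleo-heat-templates/environments/network-isolation.yaml",
        "templates/network-environment.yaml",
        "templates/firstboot/firstboot.yaml",
    ],
)

# Print a copy-pasteable, shell-quoted command line
print(" ".join(shlex.quote(arg) for arg in cmd))
```

The resulting list could also be passed to subprocess.run from the stack user's home directory, although running the command by hand as shown above works just as well.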

After a successful deployment you’ll see this:

Deploying templates in the directory /home/stack/templates/openstack-tripleo-heat-templates
[...]
Overcloud Endpoint: http://192.168.100.100:5000/v2.0/
Overcloud Deployed

An overcloudrc file with the environment variables is created so that you can start using the new OpenStack environment deployed on your laptop.

Step 6. Start using the Overcloud

Now we are ready to start testing our newly deployed platform.

$ . overcloudrc
$ openstack service list
+----------------------------------+------------+---------------+
| ID                               | Name       | Type          |
+----------------------------------+------------+---------------+
| 043524ae126b4f23bd3fb7826a557566 | glance     | image         |
| 3d5c8d48d30b41e9853659ce840ae4fe | neutron    | network       |
| 418d4f34abe449aa8f07dac77c078e9c | nova       | computev3     |
| 43480fab74fd4fd480fdefc56eecfe83 | cinderv2   | volumev2      |
| 4e01d978a648474db6d5b160cd0a71e1 | nova       | compute       |
| 6357f4122d6d41b986dab40d6fb471e3 | cinder     | volume        |
| a49119e0fd9f43c0895142e3b3f3394a | keystone   | identity      |
| b808ae83589646e6b7033f2b150e7623 | horizon    | dashboard     |
| d4c9383fa9e94daf8c74419b0b18fd6e | heat       | orchestration |
| db556409857d4d24872cdc1b718eee8f | swift      | object-store  |
| ddc3c82097d24f478edfc89b46310522 | ceilometer | metering      |
+----------------------------------+------------+---------------+

![RHEL OSP 7 in laptop - [racedo]](https://trickycloud.files.wordpress.com/2015/11/rhel-osp-7-in-laptop-racedo.jpg?w=585&h=509)